Installing GitLab on Amazon Web Services (AWS)

This page offers a walkthrough of a common configuration for GitLab on AWS. You should customize it to accommodate your needs.

NOTE: Note: For organizations with 300 users or fewer, the recommended AWS installation method is to launch an EC2 single box Omnibus Installation and implement a snapshot strategy for backing up the data.

Introduction

For the most part, we'll make use of Omnibus GitLab in our setup, but we'll also leverage native AWS services. Instead of using the Omnibus bundled PostgreSQL and Redis, we will use AWS RDS and ElastiCache.

In this guide, we'll go through a multi-node setup. We'll start by configuring our Virtual Private Cloud and subnets, then integrate services such as RDS for our database server and ElastiCache as a Redis cluster, and finally manage the GitLab instances within an auto scaling group with custom scaling policies.

Requirements

In addition to having a basic familiarity with AWS and Amazon EC2, you will need:

- An AWS account

- To create or upload an SSH key to connect to the instance via SSH

- A domain name for the GitLab instance

- An SSL/TLS certificate to secure your domain. If you do not already own one, you can provision a free public SSL/TLS certificate through AWS Certificate Manager (ACM) for use with the Elastic Load Balancer we'll create.

NOTE: Note: It can take a few hours to validate a certificate provisioned through ACM. To avoid delays later, request your certificate as soon as possible.

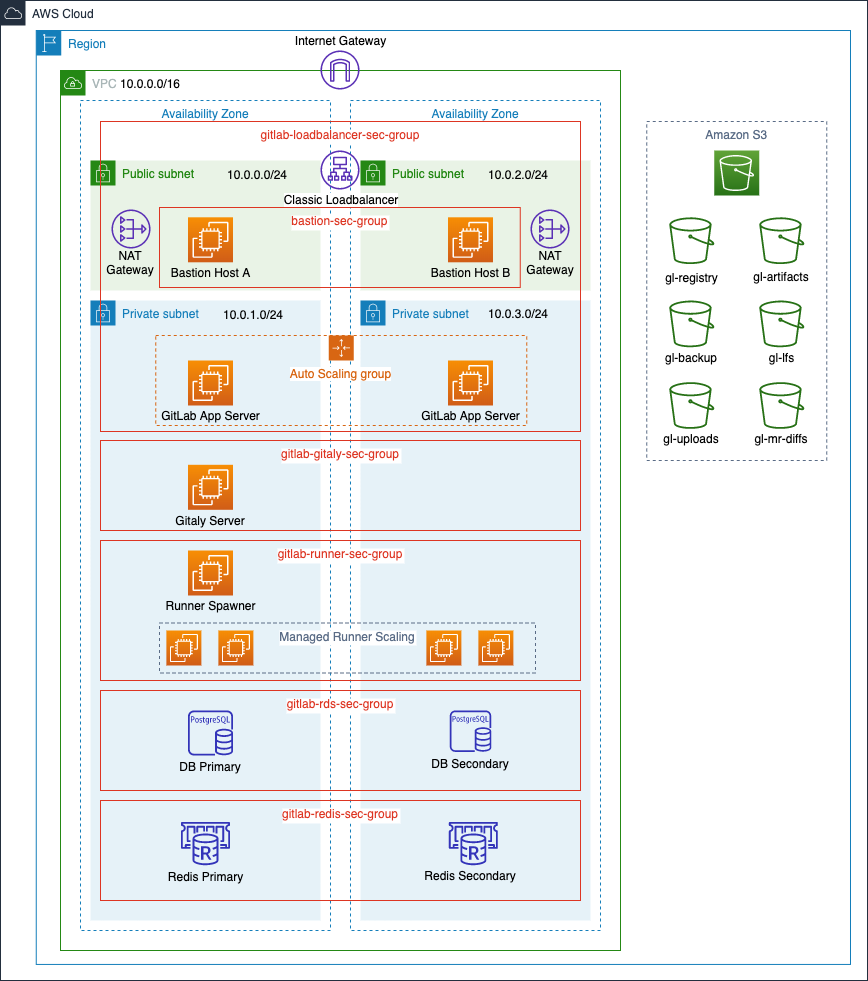

Architecture

Below is a diagram of the recommended architecture.

AWS costs

Here's a list of the AWS services we will use, with links to pricing information:

- EC2: GitLab will be deployed on shared hardware, which means on-demand pricing will apply. If you want to run it on a dedicated or reserved instance, consult the EC2 pricing page for more information on the cost.

- S3: We will use S3 to store backups, artifacts, LFS objects, etc. See the Amazon S3 pricing.

- ELB: A Classic Load Balancer will be used to route requests to the GitLab instances. See the Amazon ELB pricing.

- RDS: An Amazon Relational Database Service using PostgreSQL will be used. See the Amazon RDS pricing.

- ElastiCache: An in-memory cache environment will be used to provide a Redis configuration. See the Amazon ElastiCache pricing.

Create an IAM EC2 instance role and profile

As we'll be using Amazon S3 object storage, our EC2 instances need to have read, write, and list permissions for our S3 buckets. To avoid embedding AWS keys in our GitLab config, we'll use an IAM Role to grant our GitLab instances this access. We'll need to create an IAM policy to attach to our IAM role:

Create an IAM Policy

- Navigate to the IAM dashboard and click on Policies in the left menu.

- Click Create policy, select the JSON tab, and add a policy. We want to follow security best practices and grant least privilege, giving our role only the permissions needed to perform the required actions.

  - Assuming you prefix the S3 bucket names with gl- as shown in the diagram, add the following policy. Note that s3:ListBucket applies to the buckets themselves rather than to the objects in them, so it is granted on the bucket ARN in a separate statement:

        {
          "Version": "2012-10-17",
          "Statement": [
            {
              "Effect": "Allow",
              "Action": [
                "s3:AbortMultipartUpload",
                "s3:CompleteMultipartUpload",
                "s3:PutObject",
                "s3:GetObject",
                "s3:DeleteObject",
                "s3:PutObjectAcl"
              ],
              "Resource": [
                "arn:aws:s3:::gl-*/*"
              ]
            },
            {
              "Effect": "Allow",
              "Action": [
                "s3:ListBucket"
              ],
              "Resource": [
                "arn:aws:s3:::gl-*"
              ]
            }
          ]
        }

- Click Review policy, give your policy a name (we'll use gl-s3-policy), and click Create policy.
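If you use a bucket prefix other than gl-, it may be convenient to generate the policy document rather than edit it by hand. The following is a minimal Python sketch, not part of any GitLab or AWS tooling: the function name build_gitlab_s3_policy is hypothetical, and the sketch assumes that s3:ListBucket should be scoped to the bucket ARN while the remaining actions apply to the objects inside the buckets.

```python
import json

# Object-level actions taken from the policy above; they apply to keys inside a bucket.
OBJECT_ACTIONS = [
    "s3:AbortMultipartUpload",
    "s3:CompleteMultipartUpload",
    "s3:PutObject",
    "s3:GetObject",
    "s3:DeleteObject",
    "s3:PutObjectAcl",
]

# Assumption: s3:ListBucket is a bucket-level action, so it is scoped to the bucket ARN.
BUCKET_ACTIONS = ["s3:ListBucket"]


def build_gitlab_s3_policy(prefix: str = "gl-") -> dict:
    """Return a policy document covering every bucket whose name starts with `prefix`."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": OBJECT_ACTIONS,
                "Resource": [f"arn:aws:s3:::{prefix}*/*"],
            },
            {
                "Effect": "Allow",
                "Action": BUCKET_ACTIONS,
                "Resource": [f"arn:aws:s3:::{prefix}*"],
            },
        ],
    }


if __name__ == "__main__":
    # The printed JSON can be pasted into the JSON tab of the policy editor.
    print(json.dumps(build_gitlab_s3_policy(), indent=2))
```

Keeping the prefix as the only parameter makes it harder to widen the policy by accident when bucket names change.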

Create an IAM Role

- Still on the IAM dashboard, click on Roles in the left menu, and click Create role.

- Create a new role by selecting AWS service > EC2, then click Next: Permissions.

- In the policy filter, search for the gl-s3-policy we created above, select it, and click Tags.

- Add tags if needed and click Review.

- Give the role a name (we'll use GitLabS3Access) and click Create Role.

We'll use this role when we create a launch configuration later on.

Configuring the network

We'll start by creating a VPC for our GitLab cloud infrastructure, then we can create subnets to have public and private instances in at least two Availability Zones (AZs). Public subnets will require a route table and an associated Internet Gateway.

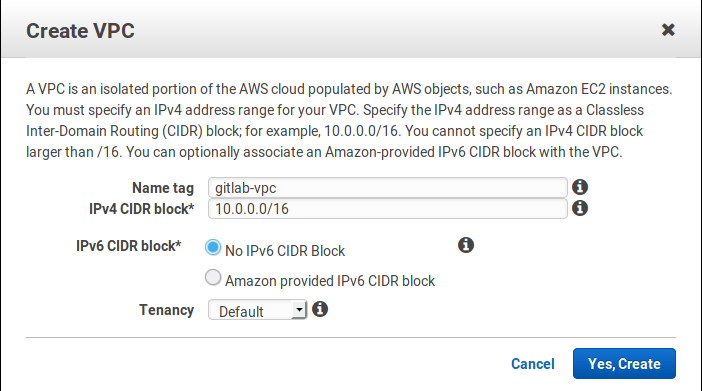

Creating the Virtual Private Cloud (VPC)

We'll now create a VPC, a virtual networking environment that you'll control:

- Navigate to https://console.aws.amazon.com/vpc/home.

- Select Your VPCs from the left menu and then click Create VPC. At the "Name tag" enter gitlab-vpc and at the "IPv4 CIDR block" enter 10.0.0.0/16. If you don't require dedicated hardware, you can leave "Tenancy" as default. Click Yes, Create when ready.

- Select the VPC, click Actions, click Edit DNS resolution, and enable DNS resolution. Hit Save when done.

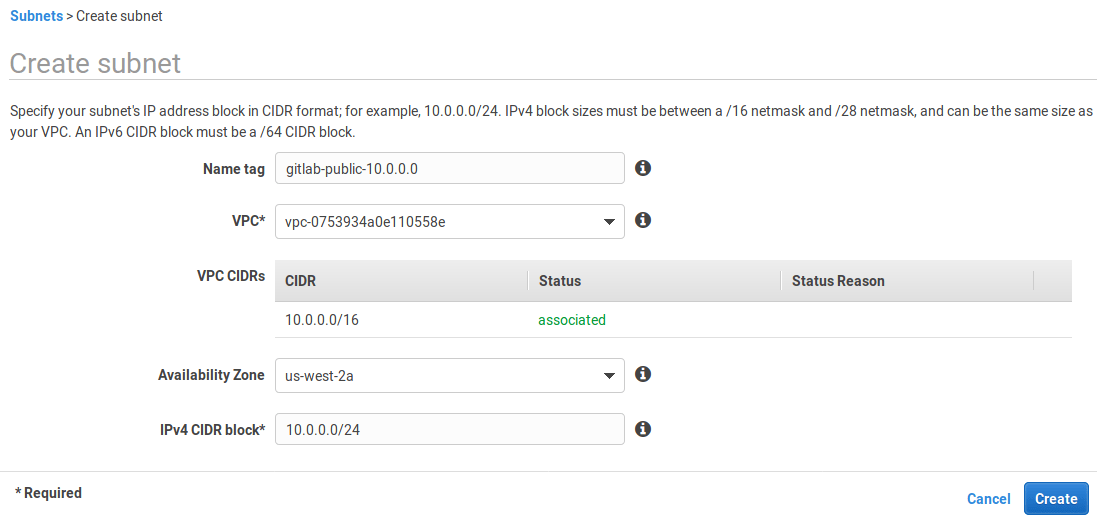

Subnets

Now, let's create some subnets in different Availability Zones. Make sure that each subnet is associated with the VPC we just created and that CIDR blocks don't overlap. This will also allow us to enable Multi-AZ for redundancy.

We will create private and public subnets to match load balancers and RDS instances as well:

- Select Subnets from the left menu.

- Click Create subnet. Give it a descriptive name tag based on the IP, for example gitlab-public-10.0.0.0, select the VPC we created previously, select an availability zone (we'll use us-west-2a), and at the IPv4 CIDR block let's give it a /24 subnet, 10.0.0.0/24.

- Follow the same steps to create all subnets:

  | Name tag | Type | Availability Zone | CIDR block |
  | --- | --- | --- | --- |
  | gitlab-public-10.0.0.0 | public | us-west-2a | 10.0.0.0/24 |
  | gitlab-private-10.0.1.0 | private | us-west-2a | 10.0.1.0/24 |
  | gitlab-public-10.0.2.0 | public | us-west-2b | 10.0.2.0/24 |
  | gitlab-private-10.0.3.0 | private | us-west-2b | 10.0.3.0/24 |

- Once all the subnets are created, enable Auto-assign IPv4 for the two public subnets:

  - Select each public subnet in turn, click Actions, and click Modify auto-assign IP settings. Enable the option and save.
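Before creating the subnets, it can help to check the plan on paper. The sketch below uses Python's standard ipaddress module to confirm that the four subnets listed above sit inside the VPC range, do not overlap, and cover two Availability Zones per type. The helper name validate_subnets is hypothetical, and nothing here talks to AWS.

```python
import ipaddress
from itertools import combinations

VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")

# (name tag, type, availability zone, CIDR block) for the subnets listed above.
SUBNETS = [
    ("gitlab-public-10.0.0.0", "public", "us-west-2a", "10.0.0.0/24"),
    ("gitlab-private-10.0.1.0", "private", "us-west-2a", "10.0.1.0/24"),
    ("gitlab-public-10.0.2.0", "public", "us-west-2b", "10.0.2.0/24"),
    ("gitlab-private-10.0.3.0", "private", "us-west-2b", "10.0.3.0/24"),
]


def validate_subnets(vpc, subnets):
    """Return a list of problems found in the subnet plan (empty if none)."""
    problems = []
    networks = [(name, ipaddress.ip_network(cidr)) for name, _, _, cidr in subnets]
    # Every subnet must fall inside the VPC's CIDR block.
    for name, net in networks:
        if not net.subnet_of(vpc):
            problems.append(f"{name} is outside {vpc}")
    # No two subnets may share addresses.
    for (name_a, net_a), (name_b, net_b) in combinations(networks, 2):
        if net_a.overlaps(net_b):
            problems.append(f"{name_a} overlaps {name_b}")
    # Each subnet type should be present in at least two Availability Zones.
    for kind in ("public", "private"):
        zones = {az for _, subnet_type, az, _ in subnets if subnet_type == kind}
        if len(zones) < 2:
            problems.append(f"{kind} subnets cover only {len(zones)} zone(s)")
    return problems


if __name__ == "__main__":
    print(validate_subnets(VPC_CIDR, SUBNETS) or "subnet plan looks consistent")
```

If you choose different CIDR blocks or zones, edit SUBNETS and rerun the check before touching the console.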

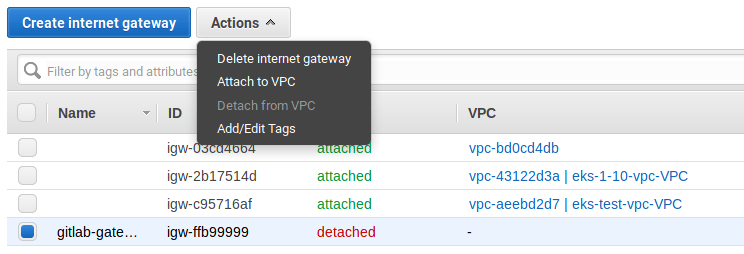

Internet Gateway

Now, still on the same dashboard, go to Internet Gateways and create a new one:

- Select Internet Gateways from the left menu.

- Click Create internet gateway, give it the name gitlab-gateway and click Create.

- Select it from the table, and then under the Actions dropdown choose "Attach to VPC".

- Choose gitlab-vpc from the list and hit Attach.

Create NAT Gateways

Instances deployed in our private subnets need to connect to the internet for updates, but should not be reachable from the public internet. To achieve this, we'll make use of NAT Gateways deployed in each of our public subnets:

- Navigate to the VPC dashboard and click on NAT Gateways in the left menu bar.

- Click Create NAT Gateway and complete the following:

  - Subnet: Select gitlab-public-10.0.0.0 from the dropdown.
  - Elastic IP Allocation ID: Enter an existing Elastic IP or click Allocate Elastic IP address to allocate a new IP to your NAT gateway.
  - Add tags if needed.
  - Click Create NAT Gateway.

Create a second NAT gateway but this time place it in the second public subnet, gitlab-public-10.0.2.0.

Route Tables

Public Route Table

We need to create a route table for our public subnets to reach the internet via the internet gateway we created earlier.

On the VPC dashboard:

- Select Route Tables from the left menu.

- Click Create Route Table.

- At the "Name tag" enter gitlab-public and choose gitlab-vpc under "VPC".

- Click Create.

We now need to add our internet gateway as a new target and route traffic bound for any destination through it.

- Select Route Tables from the left menu and select the gitlab-public route to show the options at the bottom.

- Select the Routes tab, click Edit routes > Add route and set 0.0.0.0/0 as the destination. In the target column, select the gitlab-gateway we created previously. Hit Save routes once done.

Next, we must associate the public subnets to the route table:

- Select the Subnet Associations tab and click Edit subnet associations.

- Check only the public subnets and click Save.

Private Route Tables

We also need to create two private route tables so that instances in each private subnet can reach the internet via the NAT gateway in the corresponding public subnet in the same availability zone.

- Follow the same steps as above to create two private route tables. Name them gitlab-private-a and gitlab-private-b respectively.

- Next, add a new route to each of the private route tables where the destination is 0.0.0.0/0 and the target is one of the NAT gateways we created earlier.

  - Add the NAT gateway we created in gitlab-public-10.0.0.0 as the target for the new route in the gitlab-private-a route table.
  - Similarly, add the NAT gateway in gitlab-public-10.0.2.0 as the target for the new route in the gitlab-private-b route table.

- Lastly, associate each private subnet with a private route table.

  - Associate gitlab-private-10.0.1.0 with gitlab-private-a.
  - Associate gitlab-private-10.0.3.0 with gitlab-private-b.
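The point of using two private route tables is that each private subnet reaches the internet through the NAT gateway in its own Availability Zone. The sketch below models the associations described above as plain Python dictionaries and flags any private subnet whose default route would cross zones. The dictionary and function names are hypothetical, and the zone names assume the us-west-2a and us-west-2b choices made in the subnets section.

```python
# Hypothetical in-memory model of the routing plan; nothing here talks to AWS.
PUBLIC_SUBNET_AZ = {
    "gitlab-public-10.0.0.0": "us-west-2a",
    "gitlab-public-10.0.2.0": "us-west-2b",
}
PRIVATE_SUBNET_AZ = {
    "gitlab-private-10.0.1.0": "us-west-2a",
    "gitlab-private-10.0.3.0": "us-west-2b",
}

# Route table -> public subnet hosting the NAT gateway used as the 0.0.0.0/0 target.
NAT_TARGET = {
    "gitlab-private-a": "gitlab-public-10.0.0.0",
    "gitlab-private-b": "gitlab-public-10.0.2.0",
}

# Private subnet -> route table it is associated with.
ASSOCIATION = {
    "gitlab-private-10.0.1.0": "gitlab-private-a",
    "gitlab-private-10.0.3.0": "gitlab-private-b",
}


def cross_az_routes(association=ASSOCIATION, nat_target=NAT_TARGET):
    """Return private subnets whose default route leaves their own Availability Zone."""
    mismatches = []
    for subnet, table in association.items():
        nat_subnet = nat_target[table]
        if PRIVATE_SUBNET_AZ[subnet] != PUBLIC_SUBNET_AZ[nat_subnet]:
            mismatches.append(subnet)
    return mismatches


if __name__ == "__main__":
    print(cross_az_routes() or "every private subnet uses the NAT gateway in its own zone")
```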

Load Balancer

We'll create a load balancer to evenly distribute inbound traffic on ports 80 and 443 across our GitLab application servers. Based on the scaling policies we'll create later, instances will be added to or removed from our load balancer as needed. Additionally, the load balancer will perform health checks on our instances.

On the EC2 dashboard, look for Load Balancer in the left navigation bar:

- Click the Create Load Balancer button.

- Choose the Classic Load Balancer.

- Give it a name (we'll use gitlab-loadbalancer) and for the Create LB Inside option, select gitlab-vpc from the dropdown menu.

- In the Listeners section, set HTTP port 80, HTTPS port 443, and TCP port 22 for both load balancer and instance protocols and ports.

- In the Select Subnets section, select both public subnets from the list so that the load balancer can route traffic to both availability zones.

- We'll add a security group for our load balancer to act as a firewall to control what traffic is allowed through. Click Assign Security Groups and select Create a new security group, give it a name (we'll use gitlab-loadbalancer-sec-group) and description, and allow both HTTP and HTTPS traffic from anywhere (0.0.0.0/0, ::/0). Also allow SSH traffic, select a custom source, and add a single trusted IP address or an IP address range in CIDR notation. This will allow users to perform Git actions over SSH.

- Click Configure Security Settings and set the following:

  - Select an SSL/TLS certificate from ACM or upload a certificate to IAM.
  - Under Select a Cipher, pick a predefined security policy from the dropdown. You can see a breakdown of Predefined SSL Security Policies for Classic Load Balancers in the AWS docs. Check the GitLab codebase for a list of supported SSL ciphers and protocols.

- Click Configure Health Check and set up a health check for your EC2 instances.

  - For Ping Protocol, select HTTP.
  - For Ping Port, enter 80.
  - For Ping Path, enter /users/sign_in. (We use /users/sign_in as it's a public endpoint that does not require authorization.)
  - Keep the default Advanced Details or adjust them according to your needs.

- Click Add EC2 Instances but, as we don't have any instances to add yet, come back to your load balancer after creating your GitLab instances and add them.

- Click Add Tags and add any tags you need.

- Click Review and Create, review all your settings, and click Create if you're happy.
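Once GitLab instances exist, you may want to probe the health check path yourself, for example from a bastion host, to see what the load balancer will see. The following is a rough Python sketch using only the standard library. The function name is_healthy and the opener parameter are hypothetical, and the sketch assumes that a 200 response counts as healthy. Unlike the load balancer, urllib follows redirects, so treat the result as an approximation.

```python
import urllib.error
import urllib.request


def is_healthy(base_url, path="/users/sign_in", timeout=5.0,
               opener=urllib.request.urlopen):
    """Return True if GET base_url + path answers with HTTP 200 within `timeout`."""
    url = base_url.rstrip("/") + path
    try:
        with opener(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False


if __name__ == "__main__":
    # Placeholder address; replace with the private IP of one of your instances.
    print(is_healthy("http://10.0.1.10"))
```

The opener parameter exists only so the function can be exercised without a network; in normal use the default is sufficient.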

After the Load Balancer is up and running, you can revisit your Security Groups to refine the access only through the ELB and any other requirements you might have.

Configure DNS for Load Balancer

On the Route 53 dashboard, click Hosted zones in the left navigation bar:

- Select an existing hosted zone or, if you do not already have one for your domain, click Create Hosted Zone, enter your domain name, and click Create.

- Click Create Record Set and provide the following values:

  - Name: Use the domain name (the default value) or enter a subdomain.
  - Type: Select A - IPv4 address.
  - Alias: Defaults to No. Select Yes.
  - Alias Target: Find the ELB Classic Load Balancers section and select the classic load balancer we created earlier.
  - Routing Policy: We'll use Simple but you can choose a different policy based on your use case.
  - Evaluate Target Health: We'll set this to No but you can choose to have the load balancer route traffic based on target health.
  - Click Create.

- If you registered your domain through Route 53, you're done. If you used a different domain registrar, you need to update your DNS records with your domain registrar. You'll need to:

  - Click on Hosted zones and select the domain you added above.
  - You'll see a list of NS records. From your domain registrar's admin panel, add each of these as NS records to your domain's DNS records. If you're stuck, Google "name of your registrar" add dns records and you should find a help article specific to your domain registrar.

The exact steps vary between domain registrars and are beyond the scope of this guide.

PostgreSQL with RDS

For our database server we will use Amazon RDS which offers Multi AZ for redundancy. First we'll create a security group and subnet group, then we'll create the actual RDS instance.

RDS Security Group

We need a security group for our database that will allow inbound traffic from the instances we'll deploy in our gitlab-loadbalancer-sec-group later on:

- From the EC2 dashboard, select Security Groups from the left menu bar.

- Click Create security group.

- Give it a name (we'll use gitlab-rds-sec-group), a description, and select the gitlab-vpc from the VPC dropdown.

- In the Inbound rules section, click Add rule and set the following:

  - Type: search for and select the PostgreSQL rule.
  - Source type: set as "Custom".
  - Source: select the gitlab-loadbalancer-sec-group we created earlier.

- When done, click Create security group.

RDS Subnet Group

- Navigate to the RDS dashboard and select Subnet Groups from the left menu.

- Click on Create DB Subnet Group.

- Under Subnet group details, enter a name (we'll use gitlab-rds-group), a description, and choose the gitlab-vpc from the VPC dropdown.

- From the Availability Zones dropdown, select the Availability Zones that include the subnets you've configured. In our case, we'll add us-west-2a and us-west-2b.

- From the Subnets dropdown, select the two private subnets (10.0.1.0/24 and 10.0.3.0/24) as we defined them in the subnets section.

- Click Create when ready.

Create the database

DANGER: Danger: Avoid using burstable instances (t class instances) for the database as this could lead to performance issues due to CPU credits running out during sustained periods of high load.

Now, it's time to create the database:

- Navigate to the RDS dashboard, select Databases from the left menu, and click Create database.

- Select Standard Create for the database creation method.

- Select PostgreSQL as the database engine and select the minimum PostgreSQL version as defined for your GitLab version in our database requirements.

- Since this is a production server, let's choose Production from the Templates section.

- Under Settings, set a DB instance identifier, a master username, and a master password. We'll use gitlab-db-ha, gitlab, and a very secure password respectively. Make a note of these as we'll need them later.

- For the DB instance size, select Standard classes and select an instance size that meets your requirements from the dropdown menu. We'll use a db.m4.large instance.

- Under Storage, configure the following:

  - Select Provisioned IOPS (SSD) from the storage type dropdown menu. Provisioned IOPS (SSD) storage is best suited for this use (though you can choose General Purpose (SSD) to reduce the costs). Read more about it at Storage for Amazon RDS.
  - Allocate storage and set provisioned IOPS. We'll use the minimum values, 100 and 1000, respectively.
  - Enable storage autoscaling (optional) and set a maximum storage threshold.

- Under Availability & durability, select Create a standby instance to have a standby RDS instance provisioned in a different Availability Zone.

- Under Connectivity, configure the following:

  - Select the VPC we created earlier (gitlab-vpc) from the Virtual Private Cloud (VPC) dropdown menu.
  - Expand the Additional connectivity configuration section and select the subnet group (gitlab-rds-group) we created earlier.
  - Set public accessibility to No.
  - Under VPC security group, select Choose existing and select the gitlab-rds-sec-group we created above from the dropdown.
  - Leave the database port as the default 5432.

- For Database authentication, select Password authentication.

- Expand the Additional configuration section and complete the following:

  - The initial database name. We'll use gitlabhq_production.
  - Configure your preferred backup settings.
  - The only other change we'll make here is to disable auto minor version updates under Maintenance.
  - Leave all the other settings as is or tweak according to your needs.
  - Once you're happy, click Create database.

Now that the database is created, let's move on to setting up Redis with ElastiCache.

Redis with ElastiCache

ElastiCache is an in-memory hosted caching solution. Redis maintains its own persistence and is used to store session data, temporary cache information, and background job queues for the GitLab application.

Create a Redis Security Group

- Navigate to the EC2 dashboard.

- Select Security Groups from the left menu.

- Click Create security group and fill in the details. Give it a name (we'll use gitlab-redis-sec-group), add a description, and choose the VPC we created previously.

- In the Inbound rules section, click Add rule and add a Custom TCP rule, set port 6379, and set the "Custom" source as the gitlab-loadbalancer-sec-group we created earlier.

- When done, click Create security group.
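Both the RDS and the Redis security groups use another security group, rather than an IP range, as the source of their inbound rule. The sketch below models that idea in Python so the intent is easy to check: only members of gitlab-loadbalancer-sec-group may reach PostgreSQL on 5432 and Redis on 6379. The InboundRule class and the is_allowed helper are hypothetical illustrations and do not query AWS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InboundRule:
    group: str         # security group the rule belongs to
    port: int          # TCP port opened by the rule
    source_group: str  # security group whose members may connect


# Rules described in the RDS and Redis security group sections above.
RULES = [
    InboundRule("gitlab-rds-sec-group", 5432, "gitlab-loadbalancer-sec-group"),
    InboundRule("gitlab-redis-sec-group", 6379, "gitlab-loadbalancer-sec-group"),
]


def is_allowed(rules, source_group, target_group, port):
    """Return True if a member of source_group may reach target_group on port."""
    return any(
        rule.group == target_group
        and rule.port == port
        and rule.source_group == source_group
        for rule in rules
    )


if __name__ == "__main__":
    # GitLab instances (members of the load balancer group) can reach Redis.
    print(is_allowed(RULES, "gitlab-loadbalancer-sec-group", "gitlab-redis-sec-group", 6379))
    # Bastion hosts have no rule granting them database access.
    print(is_allowed(RULES, "bastion-sec-group", "gitlab-rds-sec-group", 5432))
```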

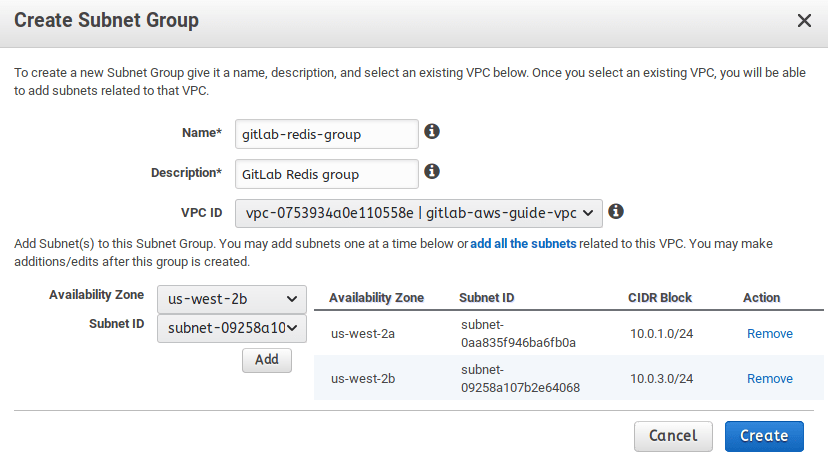

Redis Subnet Group

- Navigate to the ElastiCache dashboard from your AWS console.

- Go to Subnet Groups in the left menu, and create a new subnet group (we'll name ours gitlab-redis-group). Make sure to select our VPC and its private subnets. Click Create when ready.

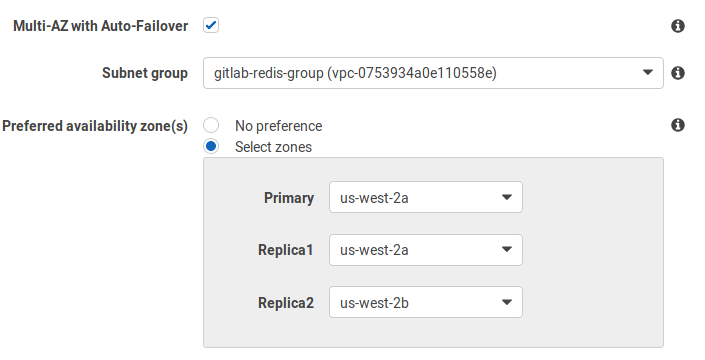

Create the Redis Cluster

- Navigate back to the ElastiCache dashboard.

- Select Redis on the left menu and click Create to create a new Redis cluster. Do not enable Cluster Mode as it is not supported. Even without cluster mode on, you still get the chance to deploy Redis in multiple availability zones.

- In the settings section:

  - Give the cluster a name (gitlab-redis) and a description.
  - For the version, select the latest of the 5.0 series (e.g., 5.0.6).
  - Leave the port as 6379 since this is what we used in our Redis security group above.
  - Select the node type (at least cache.t3.medium, but adjust to your needs) and the number of replicas.

- In the advanced settings section:

  - In the security settings, edit the security groups and choose the gitlab-redis-sec-group we had previously created.

- Leave the rest of the settings to their default values or edit to your liking.

- When done, click Create.

Setting up Bastion Hosts

Since our GitLab instances will be in private subnets, we need a way to connect to these instances via SSH to make configuration changes, perform upgrades, etc. One way of doing this is via a bastion host, sometimes also referred to as a jump box.

TIP: Tip: If you do not want to maintain bastion hosts, you can set up AWS Systems Manager Session Manager for access to instances. This is beyond the scope of this document.

Create Bastion Host A

- Navigate to the EC2 Dashboard and click on Launch instance.

- Select the Ubuntu Server 18.04 LTS (HVM) AMI.

- Choose an instance type. We'll use a t2.micro as we'll only use the bastion host to SSH into our other instances.

- Click Configure Instance Details.

  - Under Network, select the gitlab-vpc from the dropdown menu.
  - Under Subnet, select the public subnet we created earlier (gitlab-public-10.0.0.0).
  - Double check that under Auto-assign Public IP you have Use subnet setting (Enable) selected.
  - Leave everything else as default and click Add Storage.

- For storage, we'll leave everything as default and only add an 8GB root volume. We won't store anything on this instance.

- Click Add Tags and on the next screen click Add Tag.

  - We'll only set Key: Name and Value: Bastion Host A.

- Click Configure Security Group.

  - Select Create a new security group, enter a Security group name (we'll use bastion-sec-group), and add a description.
  - We'll enable SSH access from anywhere (0.0.0.0/0). If you want stricter security, specify a single IP address or an IP address range in CIDR notation.
  - Click Review and Launch.

- Review all your settings and, if you're happy, click Launch.

- Acknowledge that you have access to an existing key pair or create a new one. Click Launch Instance.

Confirm that you can SSH into the instance:

- On the EC2 Dashboard, click on Instances in the left menu.

- Select Bastion Host A from your list of instances.

- Click Connect and follow the connection instructions.

- If you are able to connect successfully, let's move on to setting up our second bastion host for redundancy.

Create Bastion Host B

- Create an EC2 instance following the same steps as above with the following changes:

  - For the Subnet, select the second public subnet we created earlier (gitlab-public-10.0.2.0).
  - Under the Add Tags section, we'll set Key: Name and Value: Bastion Host B so that we can easily identify our two instances.
  - For the security group, select the existing bastion-sec-group we created above.

Use SSH Agent Forwarding

EC2 instances running Linux use private key files for SSH authentication. You'll connect to your bastion host using an SSH client and the private key file stored on your client. Since the private key file is not present on the bastion host, you will not be able to connect to your instances in private subnets.

Storing private key files on your bastion host is a bad idea. To get around this, use SSH agent forwarding on your client. See Securely Connect to Linux Instances Running in a Private Amazon VPC for a step-by-step guide on how to use SSH agent forwarding.

Install GitLab and create custom AMI

We will need a preconfigured, custom GitLab AMI to use in our launch configuration later. As a starting point, we will use the official GitLab AMI to create a GitLab instance. Then, we'll add our custom configuration for PostgreSQL, Redis, and Gitaly. If you prefer, instead of using the official GitLab AMI, you can also spin up an EC2 instance of your choosing and manually install GitLab.

Install GitLab

From the EC2 dashboard:

- Click Launch Instance and select Community AMIs from the left menu.

- In the search bar, search for GitLab EE <version> where <version> is the latest version as seen on the releases page. Select the latest patch release, for example GitLab EE 12.9.2.

- Select an instance type based on your workload. Consult the hardware requirements to choose one that fits your needs (at least c5.xlarge, which is sufficient to accommodate 100 users).

- Click Configure Instance Details:

  - In the Network dropdown, select gitlab-vpc, the VPC we created earlier.
  - In the Subnet dropdown, select gitlab-private-10.0.1.0 from the list of subnets we created earlier.
  - Double check that Auto-assign Public IP is set to Use subnet setting (Disable).
  - Click Add Storage.
  - The root volume is 8GiB by default and should be enough given that we won't store any data there.

- Click Add Tags and add any tags you may need. In our case, we'll only set Key: Name and Value: GitLab.

- Click Configure Security Group. Check Select an existing security group and select the gitlab-loadbalancer-sec-group we created earlier.

- Click Review and launch followed by Launch if you're happy with your settings.

- Finally, acknowledge that you have access to the selected private key file or create a new one. Click Launch Instances.

Add custom configuration

Connect to your GitLab instance via Bastion Host A using SSH Agent Forwarding. Once connected, add the following custom configuration:

Disable Let's Encrypt

Since we're adding our SSL certificate at the load balancer, we do not need GitLab's built-in support for Let's Encrypt. Let's Encrypt is enabled by default when using an https domain since GitLab 10.7, so we need to explicitly disable it:

- Open /etc/gitlab/gitlab.rb and disable it:

      letsencrypt['enable'] = false

- Save the file and reconfigure for the changes to take effect:

      sudo gitlab-ctl reconfigure

Install the pg_trgm extension for PostgreSQL

From your GitLab instance, connect to the RDS instance to verify access and to install the required pg_trgm extension.

To find the host or endpoint, navigate to Amazon RDS > Databases and click on the database you created earlier. Look for the endpoint under the Connectivity & security tab.

Do not include the colon and port number:

    sudo /opt/gitlab/embedded/bin/psql -U gitlab -h <rds-endpoint> -d gitlabhq_production

At the psql prompt create the extension and then quit the session:

    psql (10.9)
    Type "help" for help.

    gitlab=# CREATE EXTENSION pg_trgm;
    gitlab=# \q

Configure GitLab to connect to PostgreSQL and Redis

- Edit /etc/gitlab/gitlab.rb, find the external_url 'http://<domain>' option and change it to the https domain you will be using.

- Look for the GitLab database settings and uncomment as necessary. In our current case we'll specify the database adapter, encoding, host, name, username, and password:

      # Disable the built-in Postgres
      postgresql['enable'] = false

      # Fill in the connection details
      gitlab_rails['db_adapter'] = "postgresql"
      gitlab_rails['db_encoding'] = "unicode"
      gitlab_rails['db_database'] = "gitlabhq_production"
      gitlab_rails['db_username'] = "gitlab"
      gitlab_rails['db_password'] = "mypassword"
      gitlab_rails['db_host'] = "<rds-endpoint>"

- Next, we need to configure the Redis section by adding the host and uncommenting the port:

      # Disable the built-in Redis
      redis['enable'] = false

      # Fill in the connection details
      gitlab_rails['redis_host'] = "<redis-endpoint>"
      gitlab_rails['redis_port'] = 6379

- Finally, reconfigure GitLab for the changes to take effect:

      sudo gitlab-ctl reconfigure

- You might also find it useful to run a check and a service status to make sure everything has been set up correctly:

      sudo gitlab-rake gitlab:check
      sudo gitlab-ctl status
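A common slip in the steps above is pasting an endpoint that still carries its colon and port number. The sketch below shows one way to strip the port and render the same gitlab.rb settings from the two endpoints. It is an illustration only: the function names strip_port and render_external_services are hypothetical, the example hostnames are placeholders, and the sketch assumes the password contains no double quotes or backslashes.

```python
def strip_port(endpoint: str) -> str:
    """Drop a trailing ':<port>' from an endpoint, if one is present."""
    host, sep, port = endpoint.rpartition(":")
    return host if sep and port.isdigit() else endpoint


def render_external_services(rds_endpoint: str, redis_endpoint: str,
                             db_password: str) -> str:
    """Render the gitlab.rb lines for the external PostgreSQL and Redis services."""
    # Assumption: db_password contains no double quotes or backslashes.
    lines = [
        "postgresql['enable'] = false",
        "gitlab_rails['db_adapter'] = \"postgresql\"",
        "gitlab_rails['db_encoding'] = \"unicode\"",
        "gitlab_rails['db_database'] = \"gitlabhq_production\"",
        "gitlab_rails['db_username'] = \"gitlab\"",
        f"gitlab_rails['db_password'] = \"{db_password}\"",
        f"gitlab_rails['db_host'] = \"{strip_port(rds_endpoint)}\"",
        "redis['enable'] = false",
        f"gitlab_rails['redis_host'] = \"{strip_port(redis_endpoint)}\"",
        "gitlab_rails['redis_port'] = 6379",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder endpoints; use the values shown in the RDS and ElastiCache consoles.
    print(render_external_services(
        "gitlab-db-ha.example.internal:5432",
        "gitlab-redis.example.internal:6379",
        "mypassword",
    ))
```

Review the rendered lines before copying them into /etc/gitlab/gitlab.rb, and run sudo gitlab-ctl reconfigure afterwards as described above.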

Set up Gitaly

CAUTION: Caution: In this architecture, having a single Gitaly server creates a single point of failure. This limitation will be removed once Gitaly Cluster is released.

Gitaly is a service that provides high-level RPC access to Git repositories. It should be enabled and configured on a separate EC2 instance in one of the private subnets we configured previously.

Let's create an EC2 instance where we'll install Gitaly:

- From the EC2 dashboard, click Launch instance.

- Choose an AMI. In this example, we'll select the Ubuntu Server 18.04 LTS (HVM), SSD Volume Type.

- Choose an instance type. We'll pick a c5.xlarge.

- Click Configure Instance Details.

  - In the Network dropdown, select gitlab-vpc, the VPC we created earlier.
  - In the Subnet dropdown, select gitlab-private-10.0.1.0 from the list of subnets we created earlier.
  - Double check that Auto-assign Public IP is set to Use subnet setting (Disable).
  - Click Add Storage.

- Increase the Root volume size to 20 GiB and change the Volume Type to Provisioned IOPS SSD (io1). (This is an arbitrary size. Create a volume big enough for your repository storage requirements.)

  - For IOPS set 1000 (20 GiB x 50 IOPS). You can provision up to 50 IOPS per GiB. If you select a larger volume, increase the IOPS accordingly. Workloads where many small files are written in a serialized manner, like git, require performant storage, hence the choice of Provisioned IOPS SSD (io1).

- Click on Add Tags and add your tags. In our case, we'll only set Key: Name and Value: Gitaly.

- Click on Configure Security Group and let's Create a new security group.

  - Give your security group a name and description. We'll use gitlab-gitaly-sec-group for both.
  - Create a Custom TCP rule and add port 8075 to the Port Range. For the Source, select the gitlab-loadbalancer-sec-group.
  - Also add an inbound rule for SSH from the bastion-sec-group so that we can connect using SSH Agent Forwarding from the Bastion hosts.

- Click Review and launch followed by Launch if you're happy with your settings.

- Finally, acknowledge that you have access to the selected private key file or create a new one. Click Launch Instances.
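The IOPS figure for the Gitaly volume follows directly from the 50 IOPS per GiB ratio mentioned above. As a small worked example, the Python sketch below computes the highest value you can enter for a given volume size. The function name max_provisioned_iops is hypothetical, and the sketch ignores any per-volume ceiling that AWS may apply to large volumes.

```python
MAX_IOPS_PER_GIB = 50  # io1 ratio quoted in the storage step above


def max_provisioned_iops(volume_gib: int, ratio: int = MAX_IOPS_PER_GIB) -> int:
    """Return the highest IOPS value allowed by the per-GiB ratio for a volume."""
    if volume_gib <= 0:
        raise ValueError("volume size must be a positive number of GiB")
    return volume_gib * ratio


if __name__ == "__main__":
    for size in (20, 100, 500):
        print(f"{size} GiB -> up to {max_provisioned_iops(size)} IOPS")
```

For the 20 GiB volume used in this guide, the function returns 1000, matching the value set in the console.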

NOTE: Optional: Instead of storing configuration and repository data on the root volume, you can also choose to add an additional EBS volume for repository storage. Follow the same guidance as above. See the Amazon EBS pricing. We do not recommend using EFS as it may negatively impact GitLab’s performance. You can review the relevant documentation for more details.

Now that we have our EC2 instance ready, follow the documentation to install GitLab and set up Gitaly on its own server. Perform the client setup steps from that document on the GitLab instance we created above.

Add Support for Proxied SSL

As we are terminating SSL at our load balancer, follow the steps at Supporting proxied SSL to configure this in /etc/gitlab/gitlab.rb.

Remember to run sudo gitlab-ctl reconfigure after saving the changes to the gitlab.rb file.

Fast lookup of authorized SSH keys

The public SSH keys for users allowed to access GitLab are stored in /var/opt/gitlab/.ssh/authorized_keys. Typically we'd use shared storage so that all the instances are able to access this file when a user performs a Git action over SSH. Since we do not have shared storage in our setup, we'll update our configuration to authorize SSH users via indexed lookup in the GitLab database.

Follow the instructions at Setting up fast lookup via GitLab Shell to switch from using the authorized_keys file to the database.

If you do not configure fast lookup, Git actions over SSH will result in the following error:

    Permission denied (publickey).
    fatal: Could not read from remote repository.

    Please make sure you have the correct access rights
    and the repository exists.

Configure host keys

Ordinarily we would manually copy the contents (private and public keys) of /etc/ssh/ on the primary application server to /etc/ssh on all secondary servers. This prevents false man-in-the-middle-attack alerts when accessing servers in your cluster behind a load balancer.

We'll automate this by creating static host keys as part of our custom AMI. As these host keys are also rotated every time an EC2 instance boots up, "hard coding" them into our custom AMI serves as a handy workaround.

On your GitLab instance run the following:

    sudo mkdir /etc/ssh_static
    sudo cp -R /etc/ssh/* /etc/ssh_static

In /etc/ssh/sshd_config update the following:

    # HostKeys for protocol version 2
    HostKey /etc/ssh_static/ssh_host_rsa_key
    HostKey /etc/ssh_static/ssh_host_dsa_key
    HostKey /etc/ssh_static/ssh_host_ecdsa_key
    HostKey /etc/ssh_static/ssh_host_ed25519_key

Amazon S3 object storage

Since we're not using NFS for shared storage, we will use Amazon S3 buckets to store backups, artifacts, LFS objects, uploads, merge request diffs, container registry images, and more. Our documentation includes instructions on how to configure object storage for each of these data types, and other information about using object storage with GitLab.

NOTE: Note:

Since we are using the AWS IAM profile we created earlier, be sure to omit the AWS access key and secret access key/value pairs when configuring object storage. Instead, use 'use_iam_profile' => true in your configuration as shown in the object storage documentation linked above.

Remember to run sudo gitlab-ctl reconfigure after saving the changes to the gitlab.rb file.
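To illustrate the shape of such a configuration, here is a minimal sketch for one data type (CI artifacts). The helper print_artifacts_object_store is hypothetical, and the bucket name and region are placeholders you supply. The setting names follow the storage-specific form in the object storage documentation, so confirm them against the documentation for your GitLab version. The output is meant to be reviewed and then added to /etc/gitlab/gitlab.rb.

```shell
#!/bin/sh
# Sketch: print a gitlab.rb fragment that stores CI artifacts in S3 using
# the instance's IAM profile instead of access keys.
# print_artifacts_object_store is a hypothetical helper; the bucket name
# and region are placeholders passed in by the caller.
print_artifacts_object_store() {
  bucket="$1"
  region="$2"
  cat <<EOF
gitlab_rails['artifacts_enabled'] = true
gitlab_rails['artifacts_object_store_enabled'] = true
gitlab_rails['artifacts_object_store_remote_directory'] = "${bucket}"
gitlab_rails['artifacts_object_store_connection'] = {
  'provider' => 'AWS',
  'region' => '${region}',
  'use_iam_profile' => true
}
EOF
}

# Usage:
#   print_artifacts_object_store my-gitlab-artifacts eu-central-1
```

Note that the connection hash contains no access key or secret key entries; the instance role supplies the credentials.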

NOTE: Note: One current feature of GitLab that still requires a shared directory (NFS) is GitLab Pages. There is work in progress to eliminate the need for NFS to support GitLab Pages.

That concludes the configuration changes for our GitLab instance. Next, we'll create a custom AMI based on this instance to use for our launch configuration and auto scaling group.

Create custom AMI

On the EC2 dashboard:

- Select the GitLab instance we created earlier.

- Click on Actions, scroll down to Image and click Create Image.

- Give your image a name and description (we'll use GitLab-Source for both).

- Leave everything else as default and click Create Image.

Now we have a custom AMI that we'll use to create our launch configuration in the next step.
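If you prefer the AWS CLI to the console, the same image can be created with aws ec2 create-image. This is a sketch that assumes the CLI is installed and configured with sufficient permissions; create_gitlab_ami is a hypothetical wrapper and the instance ID is a placeholder.

```shell
#!/bin/sh
# Sketch: create the custom AMI from the command line.
# create_gitlab_ami is a hypothetical wrapper; pass the ID of the GitLab
# instance configured above.
create_gitlab_ami() {
  instance_id="$1"
  aws ec2 create-image \
    --instance-id "$instance_id" \
    --name "GitLab-Source" \
    --description "GitLab-Source"
}

# Usage (the instance ID is a placeholder):
#   create_gitlab_ami i-0123456789abcdef0
```

The command prints the ID of the new image, which is needed when creating the launch configuration.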

Deploy GitLab inside an auto scaling group

Create a launch configuration

From the EC2 dashboard:

- Select Launch Configurations from the left menu and click Create launch configuration.

- Select My AMIs from the left menu and select the GitLab custom AMI we created above.

- Select an instance type best suited for your needs (at least a c5.xlarge) and click Configure details.

- Enter a name for your launch configuration (we'll use gitlab-ha-launch-config).

- Do not check Request Spot Instance.

- From the IAM Role dropdown, pick the GitLabAdmin instance role we created earlier.

- Leave the rest as defaults and click Add Storage.

- The root volume is 8GiB by default and should be enough given that we won’t store any data there. Click Configure Security Group.

- Check Select an existing security group and select the gitlab-loadbalancer-sec-group we created earlier.

- Click Review, review your changes, and click Create launch configuration.

- Acknowledge that you have access to the private key or create a new one. Click Create launch configuration.
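The console steps above correspond roughly to a single AWS CLI call. The sketch below assumes the CLI is configured, and that the instance profile carries the same name as the GitLabAdmin role (which is what the console creates by default). create_gitlab_launch_config is a hypothetical wrapper, and the AMI ID, security group ID, and key pair name are placeholders.

```shell
#!/bin/sh
# Sketch: create the launch configuration with the AWS CLI.
# create_gitlab_launch_config is a hypothetical wrapper. It assumes the
# instance profile is named GitLabAdmin, like the role created earlier.
create_gitlab_launch_config() {
  ami_id="$1"             # ID of the custom AMI
  security_group_id="$2"  # ID of the security group selected above
  key_name="$3"           # name of an existing EC2 key pair
  aws autoscaling create-launch-configuration \
    --launch-configuration-name gitlab-ha-launch-config \
    --image-id "$ami_id" \
    --instance-type c5.xlarge \
    --iam-instance-profile GitLabAdmin \
    --security-groups "$security_group_id" \
    --key-name "$key_name"
}

# Usage (all three values are placeholders):
#   create_gitlab_launch_config ami-0123456789abcdef0 sg-0123456789abcdef0 my-key
```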

Create an auto scaling group

- As soon as the launch configuration is created, you'll see an option to Create an Auto Scaling group using this launch configuration. Click that to start creating the auto scaling group.

- Enter a Group name (we'll use

gitlab-auto-scaling-group). - For Group size, enter the number of instances you want to start with (we'll enter

2). - Select the

gitlab-vpcfrom the Network dropdown. - Add both the private subnets we created earlier.

- Expand the Advanced Details section and check the Receive traffic from one or more load balancers option.

- From the Classic Load Balancers dropdown, select the load balancer we created earlier.

- For Health Check Type, select ELB.

- We'll leave our Health Check Grace Period as the default

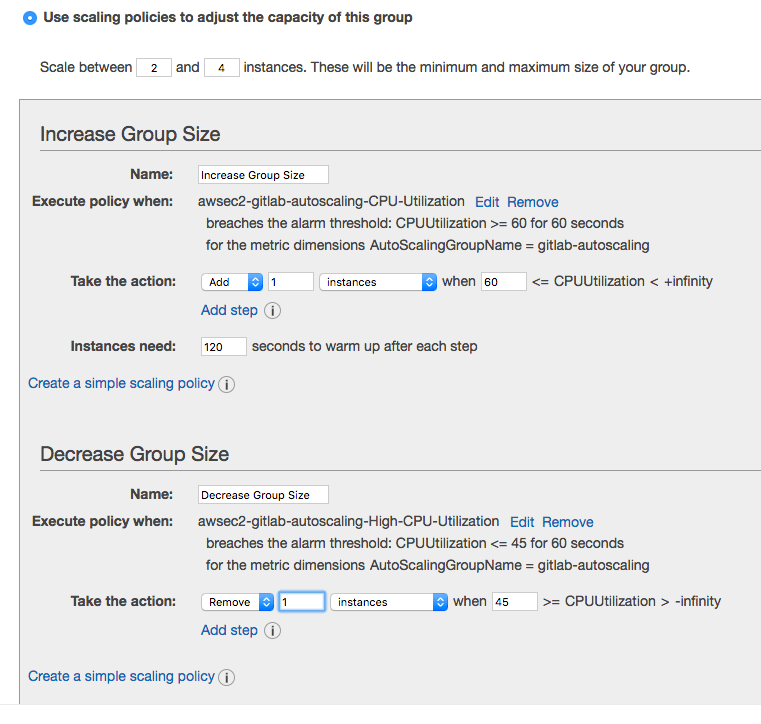

300seconds. Click Configure scaling policies. - Check Use scaling policies to adjust the capacity of this group.

- For this group we'll scale between 2 and 4 instances where one instance will be added if CPU utilization is greater than 60% and one instance is removed if it falls to less than 45%.

- Finally, configure notifications and tags as you see fit, review your changes, and create the auto scaling group.
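For reference, the group described in these steps could be sketched with the AWS CLI as follows. The function names are hypothetical, and the subnet IDs and load balancer name are placeholders. The simple scaling policies shown here only define the adjustment (add or remove one instance). The 60% and 45% CPU thresholds would still need CloudWatch alarms attached to each policy, which this sketch leaves out.

```shell
#!/bin/sh
# Sketch: create the auto scaling group and two simple scaling policies.
# Both function names are hypothetical wrappers around the AWS CLI.

create_gitlab_asg() {
  subnets="$1"        # comma-separated private subnet IDs
  load_balancer="$2"  # name of the Classic Load Balancer
  aws autoscaling create-auto-scaling-group \
    --auto-scaling-group-name gitlab-auto-scaling-group \
    --launch-configuration-name gitlab-ha-launch-config \
    --min-size 2 --max-size 4 --desired-capacity 2 \
    --vpc-zone-identifier "$subnets" \
    --load-balancer-names "$load_balancer" \
    --health-check-type ELB \
    --health-check-grace-period 300
}

# adjustment is +1 to add an instance or -1 to remove one.
add_scaling_policy() {
  policy_name="$1"
  adjustment="$2"
  aws autoscaling put-scaling-policy \
    --auto-scaling-group-name gitlab-auto-scaling-group \
    --policy-name "$policy_name" \
    --adjustment-type ChangeInCapacity \
    --scaling-adjustment="$adjustment"
}

# Usage (IDs and names are placeholders):
#   create_gitlab_asg "subnet-aaa,subnet-bbb" gitlab-loadbalancer
#   add_scaling_policy gitlab-scale-out 1
#   add_scaling_policy gitlab-scale-in -1
```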

As the auto scaling group is created, you'll see your new instances spinning up in your EC2 dashboard. You'll also see the new instances added to your load balancer. Once the instances pass the health check, they are ready to start receiving traffic from the load balancer.

Since our instances are created by the auto scaling group, go back to your instances and terminate the instance we created manually above. We only needed this instance to create our custom AMI.

Log in for the first time

Using the domain name you used when setting up DNS for the load balancer, you should now be able to visit GitLab in your browser. The very first time, you will be asked to set up a password for the root user, which has admin privileges on the GitLab instance.

After you set it up, log in with username root and the newly created password.

Health check and monitoring with Prometheus

Apart from Amazon's CloudWatch, which you can enable on various services, GitLab provides its own integrated monitoring solution based on Prometheus. For more information on how to set it up, visit the GitLab Prometheus documentation.

GitLab also has various health check endpoints that you can ping and get reports.
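As a small sketch of how those endpoints could be polled, the function below requests the health, readiness, and liveness endpoints with curl and reports which ones respond successfully. check_gitlab_health is a hypothetical helper and the URL is a placeholder. Depending on your configuration, these endpoints may only answer requests from allow-listed IP addresses, so run the check from a host that is permitted to query them.

```shell
#!/bin/sh
# Sketch: poll the GitLab health check endpoints and summarize the result.
# check_gitlab_health is a hypothetical helper; pass the base URL of your
# GitLab instance.
check_gitlab_health() {
  base_url="$1"
  status=0
  for endpoint in health readiness liveness; do
    # --fail makes curl exit non-zero on HTTP error responses.
    if curl --fail --silent --show-error --output /dev/null \
        "$base_url/-/$endpoint"; then
      echo "$endpoint: ok"
    else
      echo "$endpoint: FAILED"
      status=1
    fi
  done
  return "$status"
}

# Usage (the URL is a placeholder):
#   check_gitlab_health https://gitlab.example.com
```

The function returns a non-zero status if any endpoint fails, which makes it usable from cron jobs or other monitoring scripts.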

GitLab Runners

If you want to take advantage of GitLab CI/CD, you have to set up at least one GitLab Runner.

Read more on configuring an autoscaling GitLab Runner on AWS.

Backup and restore

GitLab provides a tool to back up and restore its Git data, database, attachments, LFS objects, and so on.

Some important things to know:

- The backup/restore tool does not store some configuration files, like secrets; you'll need to configure this yourself.

- By default, the backup files are stored locally, but you can back up GitLab using S3.

- You can exclude specific directories from the backup.
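Because the configuration files are not part of the backup, one simple approach is to archive them yourself and keep the archive somewhere separate from the application backup. The sketch below assumes the Omnibus default of /etc/gitlab containing gitlab.rb and gitlab-secrets.json; backup_gitlab_config is a hypothetical helper and the destination directory is a placeholder.

```shell
#!/bin/sh
# Sketch: archive the GitLab configuration files that the backup tool
# does not include. backup_gitlab_config is a hypothetical helper.
backup_gitlab_config() {
  config_dir="${1:-/etc/gitlab}"
  dest_dir="$2"   # where to write the archive; keep it apart from backups
  archive="$dest_dir/gitlab_config_$(date +%Y_%m_%d).tar.gz"
  tar -czf "$archive" -C "$config_dir" gitlab.rb gitlab-secrets.json
  # The archive contains secrets, so restrict access to the owner.
  chmod 600 "$archive"
  echo "$archive"
}

# Usage (as root; the destination is a placeholder):
#   backup_gitlab_config /etc/gitlab /secret/gitlab/backups
#
# To exclude directories from the application backup itself, the backup
# command accepts a SKIP variable, for example:
#   sudo gitlab-backup create SKIP=uploads,artifacts
```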

Backing up GitLab

To back up GitLab:

- SSH into your instance.

- Take a backup:

sudo gitlab-backup create

NOTE: Note

For GitLab 12.1 and earlier, use gitlab-rake gitlab:backup:create.

Restoring GitLab from a backup

To restore GitLab, first review the restore documentation, and primarily the restore prerequisites. Then, follow the steps under the Omnibus installations section.

Updating GitLab

GitLab releases a new version every month on the 22nd. Whenever a new version is released, you can update your GitLab instance:

- SSH into your instance.

- Take a backup:

sudo gitlab-backup create

NOTE: Note

For GitLab 12.1 and earlier, use gitlab-rake gitlab:backup:create.

- Update the repositories and install GitLab:

sudo apt update

sudo apt install gitlab-ee

After a few minutes, the new version should be up and running.

Conclusion

In this guide, we mostly covered scaling and some redundancy options; your mileage may vary.

Keep in mind that all solutions come with a trade-off between cost/complexity and uptime. The more uptime you want, the more complex the solution. And the more complex the solution, the more work is involved in setting up and maintaining it.

Have a read through these other resources and feel free to open an issue to request additional material:

- Scaling GitLab: GitLab supports several different types of clustering.

- Geo replication: Geo is the solution for widely distributed development teams.

- Omnibus GitLab: Everything you need to know about administering your GitLab instance.

- Upload a license: Activate all GitLab Enterprise Edition functionality with a license.

- Pricing: Pricing for the different tiers.